Apple and Google’s accessibility features in their mobile software can help people of all abilities get more out of their devices.

Smartphones have become increasingly useful for people with a variety of physical abilities, thanks to tools such as screen readers and adjustable text sizes.

Smartphone Settings That Are Easier to Use for Everyone

Even more accessibility features have been introduced or upgraded with the recent releases of Apple’s iOS 16 and Google’s Android 13, including improved live transcription tools and apps that use artificial intelligence to identify objects. When enabled, your phone can send you a visual alert when a baby is crying, or a sound alert when you’re about to walk through a door.

Many old and new accessibility tools make it easier for everyone to use the phone. Here’s a walkthrough.

Getting Started

To find all of the tools and features available on an iOS or Android phone, open the Settings app and select Accessibility. Allow yourself time to explore and experiment.

Both Apple and Google have dedicated Accessibility sections on their websites, but keep in mind that your exact features will vary depending on your software version and phone model.

Alternative Methods of Navigation

Swiping and tapping by hand to navigate a phone’s features isn’t for everyone, but iOS and Android offer a variety of ways to navigate through screens and menus, including quick-tap shortcuts and gestures to perform tasks.

These controls, along with Apple’s AssistiveTouch tools and the Back Tap function (which performs assigned actions when you tap the back of the phone), can be found in the Touch section of the iOS Accessibility settings.

Similar options are available in Android’s accessibility shortcuts. One way to reach them is to open the main Settings app, then select System, then Gestures and System Navigation.

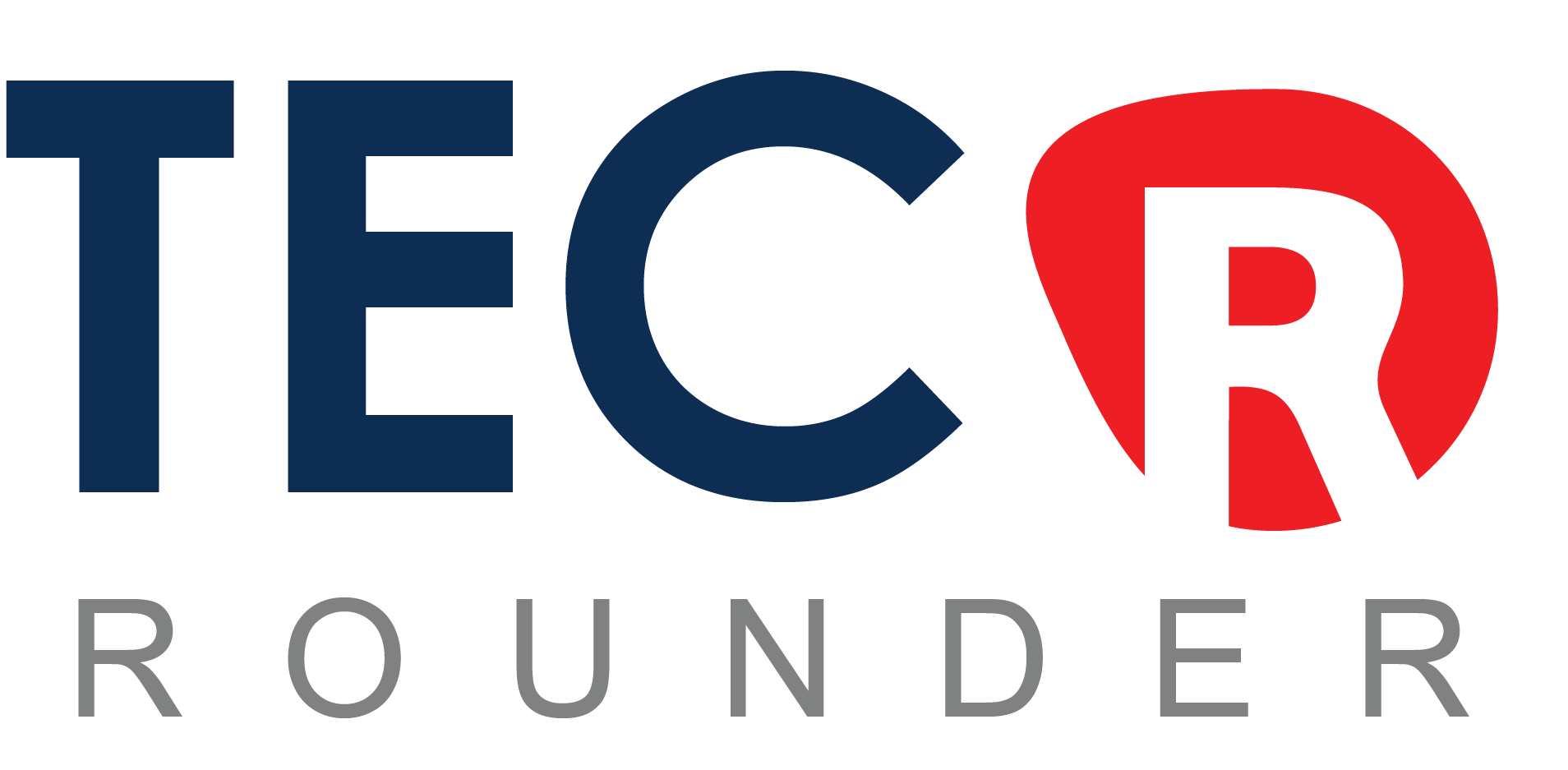

Both platforms enable navigation via third-party adaptive devices such as Bluetooth controllers or by using the camera to recognize facial expressions associated with actions, such as looking to the left to swipe left. These devices and actions can be configured in the Switch Control and Head Tracking settings on iOS, as well as in Google’s Camera Switches and Project Activate apps for Android.

Apple and Google provide a number of tools for those who are unable to see the screen. The VoiceOver feature is available in Apple’s iOS software, and Android has a similar tool called TalkBack, which provides audio descriptions of what’s on your screen (such as your battery level) as you move your finger around.

When you enable iOS Voice Control or Android Voice Access, you can control your phone with spoken commands. Enabling iOS Spoken Content or Android’s Select to Speak setting causes the phone to read aloud what’s on the screen — useful for audio-based proofreading.

Don’t overlook some tried-and-true methods of interacting with your phone without using your hands. With spoken commands, Apple’s Siri and Google Assistant can open apps and perform actions. In addition, Apple’s Dictation feature (in the iOS Keyboard settings) and Google’s Voice Typing function allow you to dictate text.

Visual Assistance

Both iOS and Android include shortcuts in their Accessibility settings for zooming in on specific areas of the phone screen. If you want larger, bolder text and other display adjustments on iOS, open the Settings app, select Accessibility, and then Display & Text Size. On Android, go to Settings, then Accessibility, and then Display Size and Text.

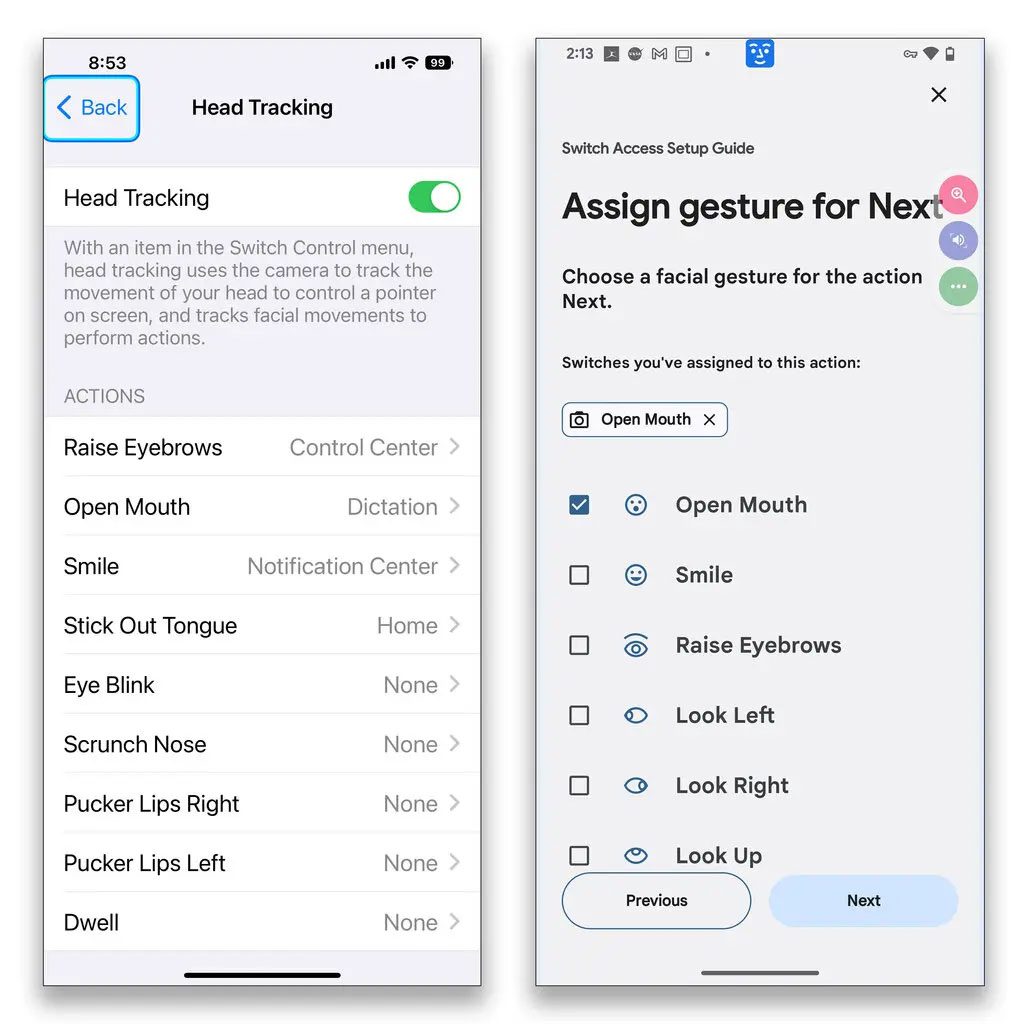

In iOS 16, Apple updated the Magnifier app, its digital magnifying glass for enlarging objects in the camera’s view. The new features are intended to help people who are blind or have low vision use their iPhones to detect doors and people nearby, as well as to identify and describe objects and surroundings.

Magnifier’s results are spoken aloud or displayed in large type on the iPhone’s screen. Door and people detection calculates distance using the device’s LiDAR (light detection and ranging) scanner, so it requires a LiDAR-equipped model such as the iPhone 12 Pro or a later Pro model.

To customize your settings, open the Magnifier app and tap the Settings icon in the lower left corner. If you can’t find the app on your phone, you can get it for free from the App Store. Magnifier is one of many vision tools in iOS, and Apple’s website includes instructions for setting up the app on the iPhone and iPad.

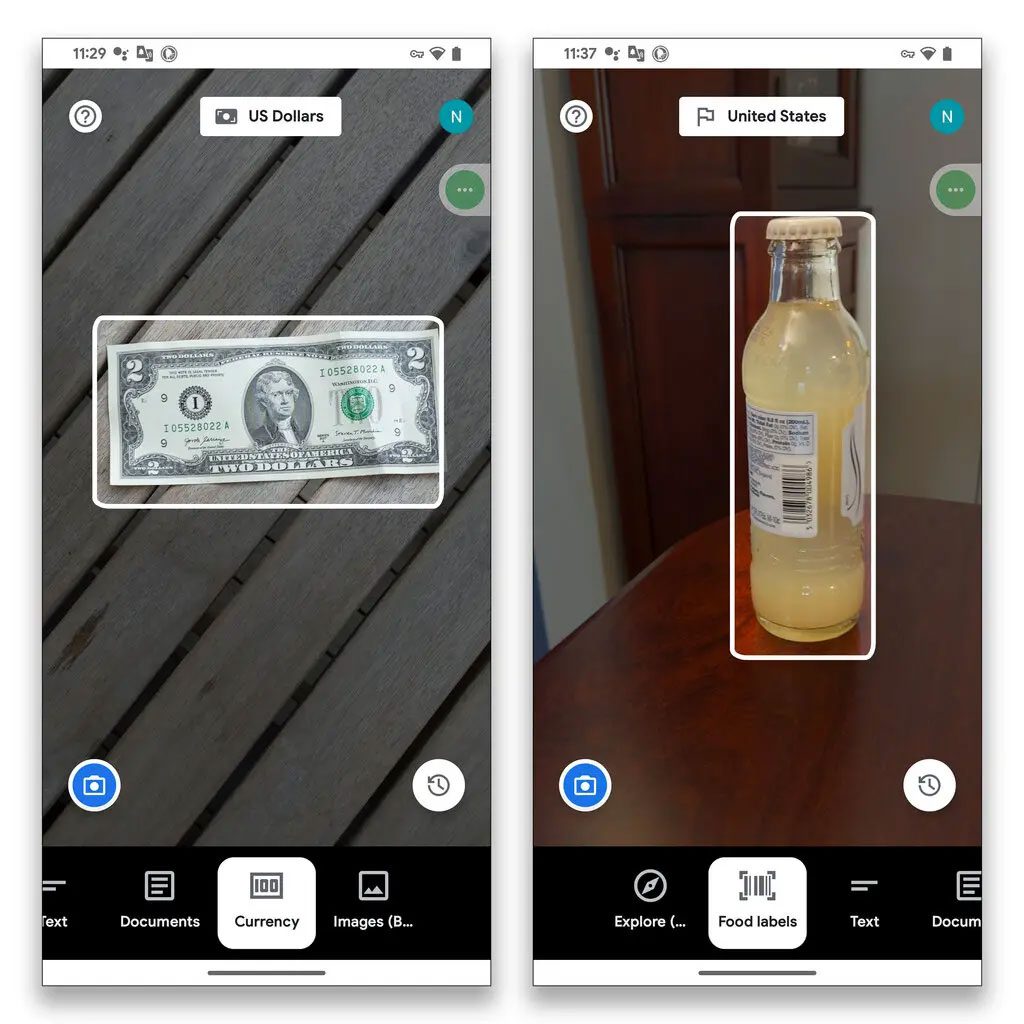

Google’s recently updated Lookout assisted-vision app (available for free in the Play store) can recognize currency, text, food labels, and other objects. Google introduced Lookout in 2018, and the app is compatible with Android 6 and later.

Auditory Aids

Both platforms allow you to use your headphones to amplify the speech around you. On iOS, Headphone Accommodations can be found in the Audio/Visual section of the Accessibility settings; on Android, look for Sound Amplifier.

With the iOS 16 update, Apple added Live Captions, a real-time transcription feature that converts audible dialogue around you into onscreen text. The Live Caption setting in Android’s Accessibility toolbox automatically captions videos, podcasts, video calls, and other audio media playing on your phone.

The free Live Transcribe & Notification Android app from Google converts nearby speech to onscreen text and can also provide visual alerts when the phone detects sounds such as doorbells or smoke alarms. The Sound Recognition tool in the Hearing section of the iPhone’s Accessibility settings provides similar sound alerts. Also, make sure you have multisensory notifications enabled on your phone, such as LED flash alerts or vibrating alerts, so you don’t miss anything.
